Leveraging AI for Automated Test Case Generation

Writing effective test cases starts with the ability to understand and decode feature specification documents (PRDs) and UI mockups.

Beyond understanding the features and the product, a tester also needs to understand real-world scenarios and how users interact with the product, and to identify the key factors that get in their way, in order to write more comprehensive test cases.

Let's decode the process of test case writing:

- Read through the PRDs and mockups.

- Identify the feature-based scenarios.

- Identify the dependent/regression scenarios.

- Define the template for the test cases - this depends on the complexity of the application, how the validations are performed, how the test data is managed and the test case management tools used.

- Talk to PMs, QAs from other teams and Devs to identify dependencies between the features / components and add more test cases.
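
The template step can be made concrete with a small data structure. The following is a minimal sketch in Python, assuming the eight-column layout that appears in the trial outputs later in this post. The class name `ManualTestCase` and its method are hypothetical and are not part of any DevAssure API.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ManualTestCase:
    """One row of a hypothetical test case template."""
    id: str
    title: str
    objective: str
    preconditions: str
    steps: List[str]
    expected_result: str
    priority: str = "Medium"   # e.g. P0, High, Medium, Low
    component: str = "UI"      # e.g. UI, Functionality

    def to_markdown_row(self) -> str:
        """Render the test case as one row of a pipe-delimited table."""
        numbered = " ".join(f"{i}. {s}" for i, s in enumerate(self.steps, 1))
        cells = [self.id, self.title, self.objective, self.preconditions,
                 numbered, self.expected_result, self.priority, self.component]
        return "| " + " | ".join(cells) + " |"


example = ManualTestCase(
    id="TC01",
    title="Create Test Case from Home Page",
    objective="Verify creating a test case from the home page.",
    preconditions="User logged in, on home page.",
    steps=['Click "New Web Test Case".', 'Enter name, click "Create".'],
    expected_result="New test case created, user redirected to editor.",
    priority="P0",
    component="UI",
)
print(example.to_markdown_row())
```

Fixing the fields up front keeps every test case in the same shape, whether it is written by hand or generated, which makes later steps such as tagging and duplicate checks easier.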

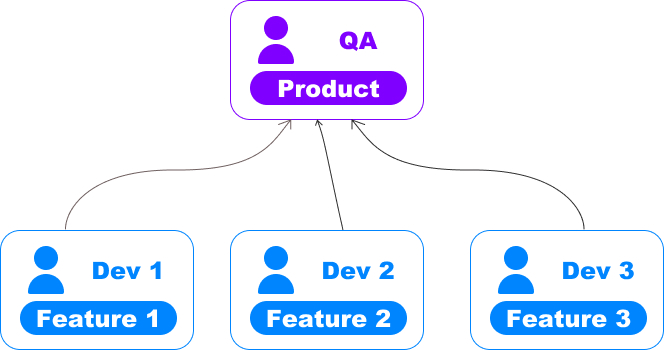

QA engineers carry the knowledge base of the entire product. They understand which new features could impact existing product workflows, and can therefore identify the points of regression.

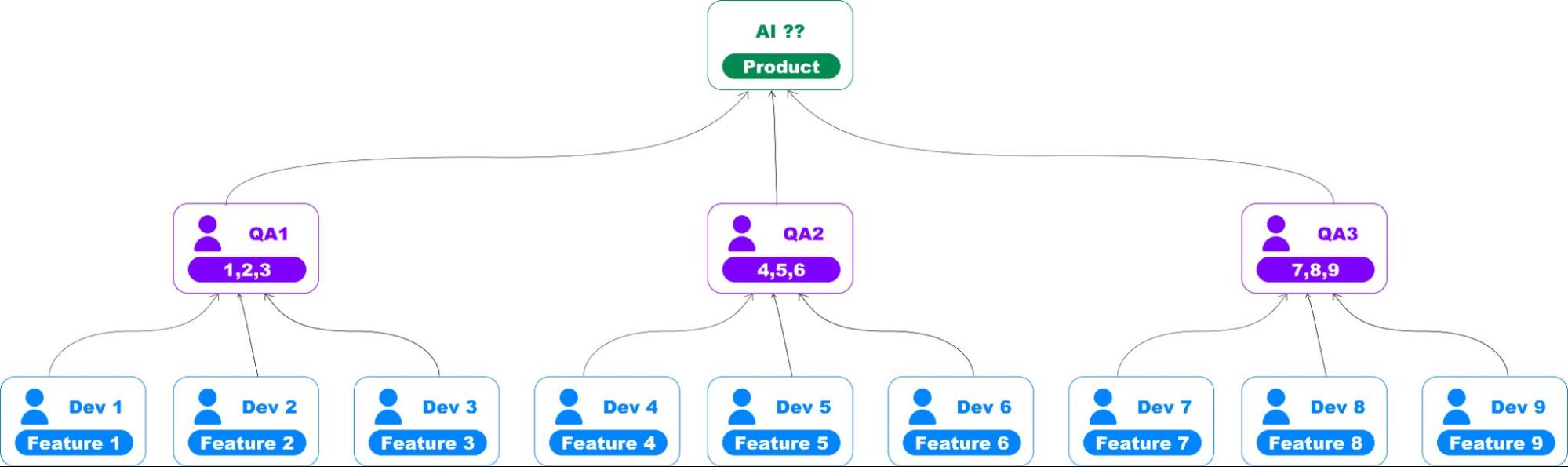

But this does not scale as the team keeps growing. A single QA engineer or team cannot know in full how an application with multiple workflows behaves. Can AI be leveraged here to store the user flows and product behaviours as the team scales?

Let's explore whether AI tools can help teams with every step of test case authoring and, more specifically, whether free Gen AI tools like ChatGPT can be used for test generation.

I've conducted an experiment using ChatGPT to generate test cases while developing DevAssure's Desktop App.

Below are some of the trials and results.

Trial 1 - Simple prompt

"I am a QA Engineer trying to write test cases for a feature. Here are the details - “”, help me write the test cases for the same. Display the test cases in a tabular form."

Output

| ID | Description | Steps | Expected Outcome | Pass/Fail Criteria |
|---|---|---|---|---|
| TC01 | Select New Web Test Case | 1. Open the app. 2. Navigate to the home screen. 3. Click on 'Select New Web Test Case'. | 'Create Web Test Case' screen should be displayed. | Screen transition is successful. |
| TC02 | Enter valid test case name | 1. Follow steps to TC01. 2. Enter a valid name in the 'Web Test Case Name' field. 3. Click 'Create'. | New web test case is created with the provided name. | Test case creation is successful. |
| TC03 | Enter empty test case name | 1. Follow steps to TC01. 2. Leave the 'Web Test Case Name' field empty. 3. Click 'Create'. | Error message should be displayed indicating name is required. | Appropriate error is displayed. |
| TC04 | Enter excessively long test case name | 1. Follow steps to TC01. 2. Enter a name exceeding the maximum length limit in the 'Web Test Case Name' field. 3. Click 'Create'. | Error message should be displayed indicating name is too long. | Appropriate error is displayed. |
| TC05 | Use special characters in test case name | 1. Follow steps to TC01. 2. Enter a name with special characters in the 'Web Test Case Name' field. 3. Click 'Create'. | Check if special characters are allowed or an error message is displayed. | Behavior matches expected rules. |
| TC06 | Cancel test case creation | 1. Follow steps to TC01. 2. Enter a valid name. 3. Click 'Cancel' instead of 'Create'. | User should be returned to the previous screen without creating a test case. | Navigation is correct without creation. |

Trial 2 - Prompt to classify and tag tests

"I am a QA Engineer and I want to write functional test cases for a feature. Find the details about the same below. Generate all possible functional test cases. Also identify the P0 scenarios and tag the test cases based on different components.

The test cases should be presented in a tabular format."

Output

| ID | Title | Objective | Preconditions | Test Steps | Expected Result | Priority | Component |
|---|---|---|---|---|---|---|---|
| TC01 | Create Test Case from Home Page | Verify creating a test case from the home page. | User logged in, on home page. | 1. Click "New Web Test Case". 2. Enter name, click "Create". | New test case created, user redirected to editor. | P0 | UI |
| TC02 | Create Test Case from Explorer | Verify creating a test case from the Explorer. | User logged in, on Explorer page. | 1. Navigate to Test Cases. 2. Click "Add new", enter name. | New test case created, user redirected to editor. | P0 | UI |
| TC03 | Enter Test Case Metadata | Verify entering metadata details in the editor. | New test case open in editor. | 1. Enter Summary, Tags, Feature in metadata fields. | Metadata saved and displayed correctly. | High | Functionality |
| TC04 | Create Detailed Test Steps | Verify creation of detailed test steps. | Test case selected, in Detailed Steps tab. | 1. Input actions and expected results in respective columns. | Detailed steps saved and displayed correctly. | P0 | Functionality |
| TC05 | Add Automation Test Steps | Verify adding steps for automation. | Test case selected, in Automation tab. | 1. Click "Add Step", select UI action for automation. | Automation steps added and saved correctly. | High | Functionality |
| TC06 | Edit Test Case Properties | Verify editing properties of a test case. | Test case open in editor. | 1. Update fields like Summary, Tags. Click to save. | Updated properties saved and displayed correctly. | Medium | UI |
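
A prompt like the Trial 2 one can also be assembled in code, so the same wording and column layout are reused for every feature. The following is a minimal sketch in Python, assuming the feature details are available as plain text. The `build_prompt` function and the `DEFAULT_COLUMNS` constant are hypothetical names, the feature text is a placeholder, and sending the prompt to a model is deliberately left out.

```python
from typing import Sequence

# Column layout taken from the Trial 2 output table.
DEFAULT_COLUMNS = ("ID", "Title", "Objective", "Preconditions",
                   "Test Steps", "Expected Result", "Priority", "Component")


def build_prompt(feature_details: str,
                 columns: Sequence[str] = DEFAULT_COLUMNS) -> str:
    """Assemble a Trial 2 style prompt around the given feature details."""
    return (
        "I am a QA Engineer and I want to write functional test cases "
        "for a feature. Find the details about the same below. "
        "Generate all possible functional test cases. "
        "Also identify the P0 scenarios and tag the test cases based on "
        "different components.\n\n"
        f"Feature details:\n{feature_details.strip()}\n\n"
        "The test cases should be presented in a tabular format "
        "with these columns: " + ", ".join(columns) + "."
    )


# Placeholder text; in practice this would be the relevant PRD excerpt.
prompt = build_prompt("Users can create a new web test case from the home page.")
print(prompt)
```

Naming the columns in the prompt is one way to keep the output format stable between runs, which matters once results from several runs have to be merged.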

Trial 3 - Prompt with PRD and details on existing functionality

More comprehensive test cases were generated.

Output

| ID | Title | Objective | Preconditions | Test Steps | Expected Result | Priority | Component |
|---|---|---|---|---|---|---|---|
| TC01 | Create Test Case from Home Page | Verify creating a test case from the home page. | User logged in, on home page. | 1. Click "New Web Test Case". 2. Enter name, click "Create". | New test case created, user redirected to editor. | P0 | UI |
| TC02 | Create Test Case from Explorer | Verify creating a test case from the Explorer. | User logged in, on Explorer page. | 1. Navigate to Test Cases. 2. Click "Add new", enter name. | New test case created, user redirected to editor. | P0 | UI |
| TC03 | Enter Test Case Metadata | Verify entering metadata details in the editor. | New test case open in editor. | 1. Enter Summary, Tags, Feature in metadata fields. | Metadata saved and displayed correctly. | High | Functionality |
| TC04 | Create Detailed Test Steps | Verify creation of detailed test steps. | Test case selected, in Detailed Steps tab. | 1. Input actions and expected results in respective columns. | Detailed steps saved and displayed correctly. | P0 | Functionality |
| TC05 | Add Automation Test Steps | Verify adding steps for automation. | Test case selected, in Automation tab. | 1. Click "Add Step", select UI action for automation. | Automation steps added and saved correctly. | High | Functionality |
| TC06 | Edit Test Case Properties | Verify editing properties of a test case. | Test case open in editor. | 1. Update fields like Summary, Tags. Click to save. | Updated properties saved and displayed correctly. | Medium | UI |
| TC07 | Edit Automation Steps | Verify editing of automation test steps. | Automation steps exist in a test case. | 1. Navigate to Automation tab. 2. Hover and edit a step. | Changes saved and updated step displayed correctly. | Medium | Functionality |
| TC08 | Delete Automation Test Step | Verify deletion of an automation test step. | Automation step present in a test case. | 1. Select step, click "Delete". | Step deleted and no longer displayed. | High | Functionality |
| TC09 | Test Search and Filter with Tags | Verify searching and filtering test cases using tags. | Multiple test cases with various tags created. | 1. Use tags in search/filter box to find test cases. | Test cases matching tags displayed. | High | UI |
| TC10 | Copy Automation Test Step | Verify ability to copy an automation test step. | At least one automation step present. | 1. Select step, click "Copy", then paste to desired location. | Copied step displayed correctly in new location. | Medium | Functionality |
| TC11 | Add Comment to Test Step | Verify adding a comment to a test step. | Test step available in the test case. | 1. Select test step, add a comment. | Comment saved and visible next to the test step. | Low | Functionality |
| TC12 | Enable/Disable Test Step (Commenting Test Steps) | Verify ability to enable/disable a test step. | Test step created in test case. | 1. Choose test step, toggle enable/disable. | Test step enabled/disabled as per toggle status. | Low | Functionality |
| TC13 | Move Test Step | Verify moving a test step to a different position. | Multiple steps created in a test case. | 1. Select a test step, move to a new position. | Test step displayed in the new position correctly. | Medium | Functionality |

Trial 4 - Build more test cases on top of Trial 3

Six additional tests were generated, but most of them were duplicates of previous results, and some were hallucinated.

Output

| ID | Title | Objective | Preconditions | Test Steps | Expected Result | Priority | Component |
|---|---|---|---|---|---|---|---|
| TC14 | Validate Project Initialization | Ensure a project can be properly initialized in the system. | User logged in, on project creation page. | 1. Initiate project setup. 2. Verify initialization steps. | Project is initialized and listed correctly in user dashboard. | High | UI |
| TC15 | Test Case Duplication | Verify that a test case can be duplicated correctly. | Test case exists that can be duplicated. | 1. Select existing test case. 2. Duplicate it. | New test case is a duplicate and contains the same details. | Low | UI |
| TC16 | Enter Test Case Metadata | Verify entering metadata details in the editor. | New test case open in editor. | 1. Enter Summary, Tags, Feature in metadata fields. | Metadata saved and displayed correctly. | High | Functionality |
| TC17 | Create Detailed Test Steps | Verify creation of detailed test steps. | Test case selected, in Detailed Steps tab. | 1. Input actions and expected results in respective columns. | Detailed steps saved and displayed correctly. | P0 | Functionality |
| TC18 | Edit Test Case Properties | Verify editing properties of a test case. | Test case open in editor. | 1. Update fields like Summary, Tags. Click to save. | Updated properties saved and displayed correctly. | Medium | UI |
| TC19 | Load Test Case Efficiency | Test the load efficiency of opening a test case. | User selects a test case to open. | 1. Open test case. 2. Measure load time. | Test case opens within acceptable time limits. | Medium | Performance |

Inferences

- Trial 1 results are neither relevant nor useful, because the prompt gave no complete context of the feature or application.

- Trial 2 results are appropriate, but the test cases are very high level.

- Trial 3 results are accurate, but 13 test cases are not enough to validate the feature.

- Trial 4 results included duplicates of previous results. Checking and cleaning the list took extra effort and left fewer usable tests.

- Trial 4 also had results that were not related to the data in the PRD; in other words, the model started hallucinating.
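
Part of the cleanup needed after Trial 4 can be automated. The following is a minimal sketch in Python, assuming each test case is a dict with `id` and `title` keys and that an identical title (ignoring case and extra whitespace) is a good enough signal for a duplicate. That is a simplification: reworded near-duplicates would need fuzzy or semantic matching, and hallucinated tests still need a human review. The function names are hypothetical.

```python
from typing import Dict, List


def normalize(title: str) -> str:
    """Lower-case the title and collapse runs of whitespace."""
    return " ".join(title.lower().split())


def drop_duplicates(existing: List[Dict[str, str]],
                    generated: List[Dict[str, str]]) -> List[Dict[str, str]]:
    """Return generated test cases whose titles are not already known."""
    seen = {normalize(tc["title"]) for tc in existing}
    fresh = []
    for tc in generated:
        key = normalize(tc["title"])
        if key not in seen:
            seen.add(key)  # also catches repeats within the new batch
            fresh.append(tc)
    return fresh


# Titles taken from the Trial 3 and Trial 4 tables (subset of Trial 3).
trial_3 = [
    {"id": "TC03", "title": "Enter Test Case Metadata"},
    {"id": "TC04", "title": "Create Detailed Test Steps"},
    {"id": "TC06", "title": "Edit Test Case Properties"},
]
trial_4 = [
    {"id": "TC14", "title": "Validate Project Initialization"},
    {"id": "TC15", "title": "Test Case Duplication"},
    {"id": "TC16", "title": "Enter Test Case Metadata"},
    {"id": "TC17", "title": "Create Detailed Test Steps"},
    {"id": "TC18", "title": "Edit Test Case Properties"},
    {"id": "TC19", "title": "Load Test Case Efficiency"},
]

fresh = drop_duplicates(trial_3, trial_4)
print([tc["id"] for tc in fresh])
```

On this data the filter keeps TC14, TC15 and TC19 and drops the three repeated titles. It does not judge whether the remaining tests are grounded in the PRD.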

Several factors need to be considered when using AI to write test cases:

- The test case list should cover all the user flows, including negative tests.

- PRDs / Spec documents could be huge and might not fit in the LLM’s context window.

- Many UI applications rely on UI mockups in tools like Figma, rather than using PRDs as the source of truth.

- Duplicate test cases should not be created each time the AI generates tests.

- The AI should have access to information about the entire application, or to existing test cases, so that it can create new tests for integration and dependent flows.

- The AI should have access to a test data store or sample data, so that it can create test cases with appropriate test data.
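
For the context-window factor, one common workaround is to split a large document into chunks and generate tests per chunk. The following is a minimal sketch in Python, assuming paragraphs are separated by blank lines and using a word count as a rough stand-in for tokens (real limits are measured in model-specific tokens). The names `chunk_prd` and `max_words` are hypothetical.

```python
from typing import List


def chunk_prd(text: str, max_words: int = 500) -> List[str]:
    """Group paragraphs into chunks of at most max_words words each.

    A single paragraph longer than max_words is kept whole as its own
    chunk rather than being cut mid-sentence.
    """
    chunks: List[str] = []
    current: List[str] = []
    count = 0
    for para in text.split("\n\n"):
        para = para.strip()
        if not para:
            continue
        words = len(para.split())
        # Start a new chunk when adding this paragraph would exceed the budget.
        if current and count + words > max_words:
            chunks.append("\n\n".join(current))
            current, count = [], 0
        current.append(para)
        count += words
    if current:
        chunks.append("\n\n".join(current))
    return chunks


# Five placeholder paragraphs of 12 words each, with a 30-word budget.
prd = "\n\n".join(f"Section {i}: " + "word " * 10 for i in range(5))
chunks = chunk_prd(prd, max_words=30)
print(len(chunks))
```

Chunking on paragraph boundaries keeps each requirement intact, but tests generated per chunk can overlap or miss flows that span chunks, so a merge and review step is still needed afterwards.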

Given all these factors, we can leverage Gen AI to build an effective tool that assists with test case authoring and test case management.

Will this AI tool replace QA Engineers?

No! This will help QA engineers be more productive and help teams streamline the testing process. It will help engineering teams scale faster and ship faster with better quality.

QA engineers can then focus on higher-impact activities such as exploratory testing, monkey testing, and usability validation, and let tools like DevAssure handle the mundane tasks. Such tools can be used by anyone on the team, whether PMs, developers, or QA engineers, so the responsibility for quality can and should be shared across all teams.

DevAssure's Test Case Generation from Figma Mockups and PRDs

DevAssure AI connects seamlessly with Figma and generates feature and regression test cases from specification documents and mockups within a few seconds. Save hundreds of hours of manual work in writing test cases.
