Flaky Tests | What Are Flaky Tests and How to Prevent Them?

UI automation is always challenging. Some of the reasons include:

- Validation of different components and their behaviours

- Validation of structures with dynamic data

- Validation of dynamically changing content

- Validating async operations

Because of the complexity involved in automating interactions with these components and their data, UI tests are often unstable: they do not pass or fail consistently. Unstable tests are a huge pain in UI automation, because a lot of manual intervention is required to check whether a failure is real and to identify why the test failed now and not during the previous run. Only then can a fix that makes the test stable be identified.

What are Flaky Tests?

Flaky tests are tests that sometimes pass and sometimes fail even though there are no code changes. The same test can yield a different result from one run to the next, which makes debugging the failure very difficult. This is a common problem in UI automation, and one of the main reasons why quality engineering teams do not rely 100% on automated UI tests.
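One way to make this definition concrete is to rerun a test several times against unchanged code and see whether the outcomes are mixed. The following is a minimal sketch, not a feature of any particular test runner: `classify` and `make_intermittent_test` are hypothetical helper names, and the intermittent failure is simulated deterministically so the example is reproducible.

```python
from collections import Counter
from typing import Callable


def classify(test: Callable[[], None], runs: int = 10) -> str:
    """Run the same test repeatedly and label it by its mix of outcomes."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    outcomes = Counter()
    for _ in range(runs):
        try:
            test()
            outcomes["pass"] += 1
        except AssertionError:
            outcomes["fail"] += 1
    if outcomes["pass"] and outcomes["fail"]:
        return "flaky"  # both passes and failures with no code change
    return "stable-pass" if outcomes["pass"] else "stable-fail"


def make_intermittent_test(fail_every: int) -> Callable[[], None]:
    """Build a simulated test that fails on every `fail_every`-th call."""
    calls = {"count": 0}

    def test() -> None:
        calls["count"] += 1
        assert calls["count"] % fail_every != 0, "simulated intermittent failure"

    return test


if __name__ == "__main__":
    print(classify(make_intermittent_test(3)))  # prints "flaky"
    print(classify(lambda: None))               # prints "stable-pass"
```

A test labelled flaky this way has not been diagnosed yet; the rerun only confirms that the failure does not depend on a code change.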

What causes Tests to be Flaky?

Automation becomes essential as the number of engineers on a team keeps growing, because more new features are developed and more changes are made to existing ones. If all of these changes were tested manually, the release cycle would be prolonged and the time taken to run the regression tests would keep growing. However, as more tests are automated, they tend to become less stable, adding overhead in debugging the failed tests.

Some of the common causes of flaky tests in UI automation are:

Poorly Written Locators

In UI automation, locators help identify and automate interactions with the elements and components on the web page. There are different types of locators:

- XPath

- ID

- Classname

- CSS

- Tag Name

Some teams also use custom attributes such as data-test-id as locators. The choice of locators depends heavily on the application and the team. Carelessly chosen locators cause flaky tests, and applications with dynamic or frequently changing UI elements need especially careful locator selection.

Parallel Execution and Concurrency

If there are data dependencies or use case dependencies between multiple tests, running them in parallel or in random order can cause the tests to be flaky.

Consider an example where a Sign Up test and a Login test run in parallel. The Login test passes as long as it runs after the Sign Up test has created the user. If the random ordering happens to run the Login test first, it fails.

Consider another example where two tests access the same row in a table in the UI to validate their outcomes. Because both tests use the same data, one test may read the row after the other has already modified it, so the data it validates is inconsistent and the test fails intermittently.

Proper test planning for test automation is required to understand how these data dependencies or use case dependencies between multiple tests need to be handled.

Async Operations & Dynamic Waits

When there are async operations, such as uploading a video on YouTube, the application needs time to complete the operation, so the test needs an async or dynamic wait. In some cases the operation takes longer than expected because of other factors, and the wait times out, causing the test to fail intermittently.
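A dynamic wait of this kind can be sketched as a polling loop with a deadline. This is an illustrative helper using only the Python standard library, not the API of any specific automation tool; `wait_until` and `FakeUpload` are hypothetical names, and the fake upload simply reports completion after a fixed number of checks.

```python
import time
from typing import Callable


def wait_until(condition: Callable[[], bool],
               timeout: float = 10.0,
               interval: float = 0.25) -> bool:
    """Poll `condition` until it returns a truthy value or `timeout` elapses."""
    deadline = time.monotonic() + timeout
    while True:
        if condition():
            return True
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(interval)


class FakeUpload:
    """Stand-in for an async operation that finishes after a few status checks."""

    def __init__(self, checks_needed: int) -> None:
        self.remaining = checks_needed

    def is_done(self) -> bool:
        self.remaining -= 1
        return self.remaining <= 0


if __name__ == "__main__":
    upload = FakeUpload(checks_needed=3)
    # Returns as soon as the operation reports completion, instead of sleeping
    # for a fixed worst-case duration.
    print(wait_until(upload.is_done, timeout=2.0, interval=0.01))
```

The timeout is still a guess about how long the operation can take. If it is set too close to the typical duration, the test fails whenever the environment is slower than usual, which is exactly the intermittent failure described above.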

Test Environment Issues

Test automation often works locally while it is being developed, but becomes unstable and flaky once the automated tests are added to the CI/CD pipelines. This can be because of the test environment. Various factors affect the stability of the test environment:

- The number of operations being executed can degrade performance, causing tests that run in parallel to fail intermittently.

- Multiple tests updating and removing data can cause tests to fail intermittently.

- Automated test cases written to break the system (corner cases) can cause other tests to fail intermittently, especially when they are executed in parallel.

- Browser updates, WebDriver updates and similar changes can cause flaky tests.

Ways to Prevent Flaky Tests

Flaky tests become a huge problem when there are hundreds of automated tests. When automating UI tests, following specific processes and implementation methods can greatly improve test stability and thereby prevent flakiness.

Identifying Stable Locators

Based on the application, QA engineers should identify stable locators for the elements in their application. For instance, some React apps have dynamic IDs; in that case, IDs should not be used as locators. Some apps have auto-generated class names that change with every page load, so such class names should not be used as identifiers. When using XPaths, prefer relative XPaths over absolute ones.

Consider the following example: building a locator for a cell in an Excel-like table. The simplest way of doing this would be to locate the cell based on its row and column position:

//table[1]//tr[2]//td[1]

This locator becomes flaky if the number of rows or columns in the table changes, or if more tables are added before the current table.

A better way to write this locator would be to:

- Get the id or any unique property of the table

- Locate the cell within the table based on the value of the cell

//table[@id='customers']//tr//td//span[text()='Google']
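The difference between the two locators above can be demonstrated on a small page snippet. The sketch below uses Python's standard-library `xml.etree.ElementTree`, which supports only a subset of XPath, so the predicate `[.='Google']` stands in for `text()='Google'`. The page markup and the `promotions` table are hypothetical and exist only to show what happens when another table is added before the target.

```python
import xml.etree.ElementTree as ET
from typing import Optional

# Hypothetical markup: the page before and after an unrelated table is added.
PAGE_BEFORE = """
<body>
  <table id="customers">
    <tr><th>Company</th></tr>
    <tr><td><span>Google</span></td></tr>
  </table>
</body>
"""

PAGE_AFTER = """
<body>
  <table id="promotions">
    <tr><th>Offer</th></tr>
    <tr><td><span>Banner</span></td></tr>
  </table>
  <table id="customers">
    <tr><th>Company</th></tr>
    <tr><td><span>Google</span></td></tr>
  </table>
</body>
"""

# Position-based locator, as in the first example.
POSITIONAL = ".//table[1]//tr[2]//td[1]"
# Locator anchored on the table id and the cell value, as in the second example.
ANCHORED = ".//table[@id='customers']//tr//td//span[.='Google']"


def located_text(page: str, locator: str) -> Optional[str]:
    """Return the text of the first element matching `locator`, or None."""
    root = ET.fromstring(page.strip())
    element = root.find(locator)
    if element is None:
        return None
    return "".join(element.itertext()).strip()


if __name__ == "__main__":
    print(located_text(PAGE_BEFORE, POSITIONAL))  # prints "Google"
    print(located_text(PAGE_AFTER, POSITIONAL))   # prints "Banner"
    print(located_text(PAGE_BEFORE, ANCHORED))    # prints "Google"
    print(located_text(PAGE_AFTER, ANCHORED))     # prints "Google"
```

The positional locator still matches something after the page changes, but it matches the wrong cell. The anchored locator keeps pointing at the intended element.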

Test Planning for UI Automation

Before starting with UI automation, it is important to plan the implementation of the automation scripts. Every test case needs to be independent. Every test case should have its own test data and no test case should consume the data or validate the data being used by another test case.

For instance, if there is a test case for Login and one for Sign Up, the Login test case should not use the user details from Sign Up. Instead, the user for Login should be created through the UI, through APIs, or by seeding the data directly into the database.
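A simple way to keep test data independent is to generate a fresh, uniquely named record for each test rather than sharing one. The following is a minimal sketch under stated assumptions: `UserData` and `new_test_user` are hypothetical names, and the `example.test` domain is a placeholder rather than anything the application requires.

```python
import uuid
from dataclasses import dataclass


@dataclass(frozen=True)
class UserData:
    """Credentials owned by exactly one test case."""
    email: str
    password: str


def new_test_user(prefix: str = "login-test") -> UserData:
    """Create credentials that no other test (or parallel run) will reuse."""
    token = uuid.uuid4().hex[:12]
    return UserData(email=f"{prefix}-{token}@example.test",
                    password=f"Pw-{token}")


if __name__ == "__main__":
    login_user = new_test_user("login")
    signup_user = new_test_user("signup")
    # Each test gets its own user, so execution order no longer matters.
    print(login_user.email != signup_user.email)  # prints "True"
```

The Login test would register `login_user` through an API or a database seed in its own setup, so it never depends on the Sign Up test having run first.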

Besides this, test planning should also decide how the validations are done. Some validations can be done through the UI and some through APIs or the database.

Shorter Test Cases

Automated scripts should be short. This does not mean that end-to-end flows should not be validated. It means that the different scenarios of an end-to-end flow should be validated in isolation, with one test case covering the entire flow.

Consider an example of an ecommerce application. To validate the checkout page for different scenarios, the UI automation scripts should not repeat every step, like searching for items and adding items to the cart, through the UI. Those steps should be handled as independent test cases. For the checkout page validation:

- Write the automation scripts for Login

- Call the APIs for adding items to the cart (don't do this through the UI for every test case; only the main test case that validates adding items to the cart needs to do it through the UI)

- Load the cart or the checkout page and do the validations

Not all validations need to be done through the UI either. APIs or database queries can be used to validate some of the data for the use case under test, which also helps improve the stability of the test. Such choices need to be considered during planning, before scripting of the UI tests begins. They help maintain the stability of the tests while still validating the functionality of the application, and they also reduce the test execution time.

Handling Test Data

For every test case, the test data should be created or seeded using APIs. Similarly, after every test run the data should be purged from the test environment. This keeps the test case stable and ensures that accumulating data does not overload the test environment and cause intermittent failures. Performance and load testing can be handled in a separate test suite and test environment.
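The seed-then-purge pattern can be sketched with a context manager that always cleans up, even when the test body fails. `FakeCustomerApi` is a hypothetical in-memory stand-in for the application's data API; a real suite would call the application's own endpoints instead.

```python
import uuid
from contextlib import contextmanager
from typing import Dict, Iterator


class FakeCustomerApi:
    """In-memory stand-in for a data API (hypothetical, for illustration only)."""

    def __init__(self) -> None:
        self.records: Dict[str, dict] = {}

    def create(self, name: str) -> str:
        customer_id = uuid.uuid4().hex
        self.records[customer_id] = {"name": name}
        return customer_id

    def delete(self, customer_id: str) -> None:
        self.records.pop(customer_id, None)


@contextmanager
def seeded_customer(api: FakeCustomerApi, name: str) -> Iterator[str]:
    """Seed one customer before the test body and purge it afterwards."""
    customer_id = api.create(name)
    try:
        yield customer_id
    finally:
        # Runs on success and on failure, so data never accumulates.
        api.delete(customer_id)


if __name__ == "__main__":
    api = FakeCustomerApi()
    with seeded_customer(api, "Google") as customer_id:
        print(customer_id in api.records)  # prints "True"
    print(len(api.records))                # prints "0"
```

Putting the cleanup in a `finally` block matters most for flaky suites: a test that fails midway would otherwise leave its data behind and affect later runs.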

Using On-Demand Test Environments

Instead of using an existing test environment, a new environment can be created for every PR or for every scheduled automation run. This also provides the flexibility to deploy exactly the application code that needs to be tested in that environment, thereby reducing flakiness when multiple automation jobs run at the same time. Technologies like Docker can be used for spinning up new environments.
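As one possible illustration, Docker Compose can start an isolated copy of an environment per run when each run uses its own project name. The sketch below only builds the `docker compose` command lines and does not execute them. The compose file name, the `uitests` prefix, and the run label are assumptions for the example, and it presumes a compose file describing the application already exists.

```python
import re
import uuid
from typing import List, Tuple


def compose_commands(compose_file: str, run_label: str) -> Tuple[List[str], List[str]]:
    """Build matching start and teardown commands for one isolated environment."""
    # Compose project names must be lowercase, so normalise the label first.
    safe_label = re.sub(r"[^a-z0-9_-]", "-", run_label.lower())
    project = f"uitests-{safe_label}-{uuid.uuid4().hex[:8]}"
    base = ["docker", "compose", "-f", compose_file, "-p", project]
    start = base + ["up", "-d"]
    teardown = base + ["down", "--volumes"]
    return start, teardown


if __name__ == "__main__":
    start, teardown = compose_commands("docker-compose.yml", "PR-42")
    print(" ".join(start))
    print(" ".join(teardown))
    # To actually create and remove the environment, something like:
    #   subprocess.run(start, check=True)
    #   ... run the UI tests ...
    #   subprocess.run(teardown, check=True)
```

Because every run gets a distinct project name, two automation jobs started at the same time do not share containers or volumes.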

How DevAssure prevents Flaky Tests

DevAssure is a test automation platform for UI test cases. It enables automation scripting through a no-code solution and has a very powerful recorder that lets QA engineers record test cases and generate the automation scripts. DevAssure guarantees 99.99% stability in UI test cases automated using DevAssure.

Learn more about DevAssure's Intelligent Recorder

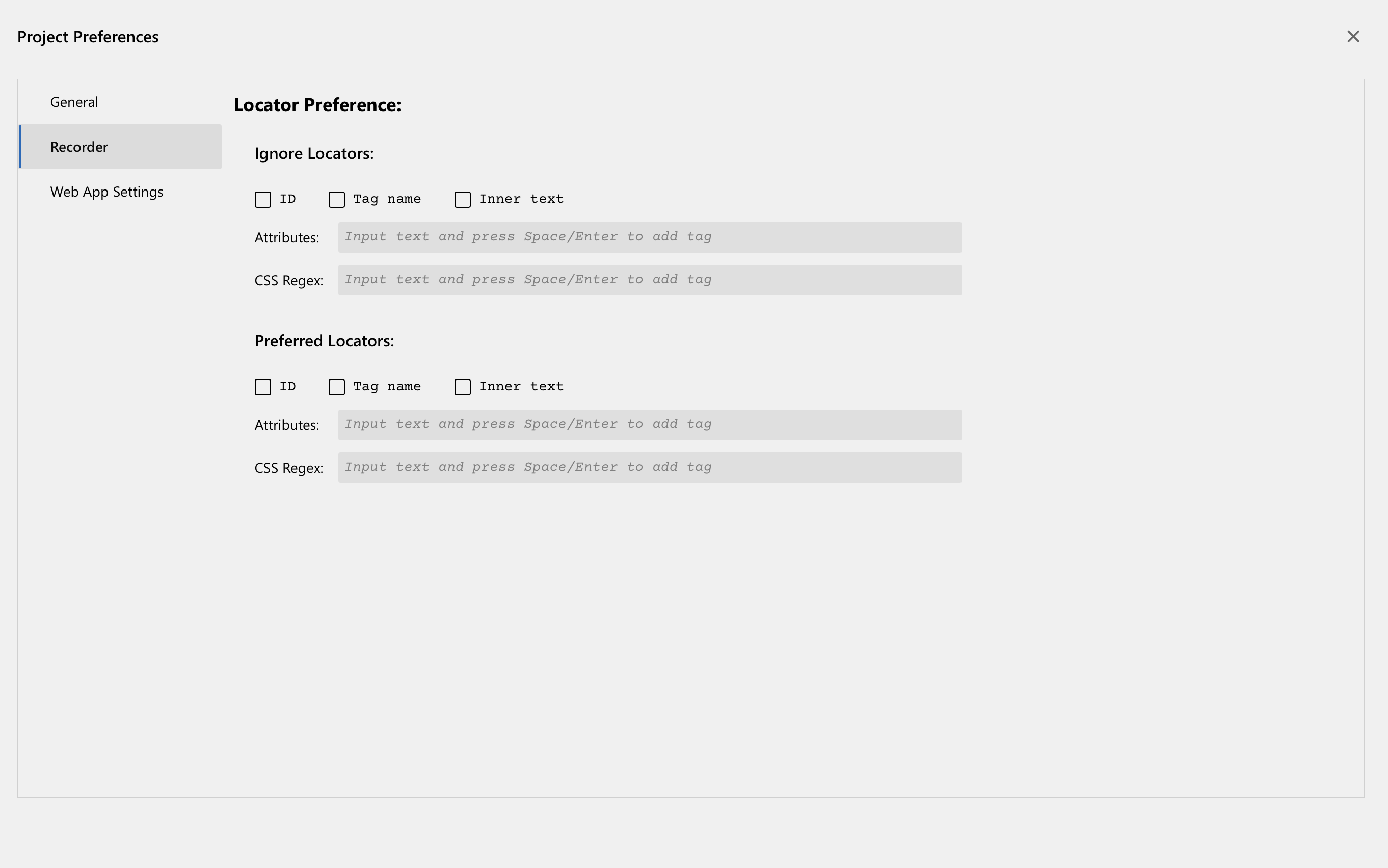

DevAssure enables users to set locator preferences, which define how locators are chosen. The recorder then picks locators for the elements in the application based on these preferences, on top of DevAssure's robust algorithm for creating locators.

Learn more about DevAssure's Locator Preferences.

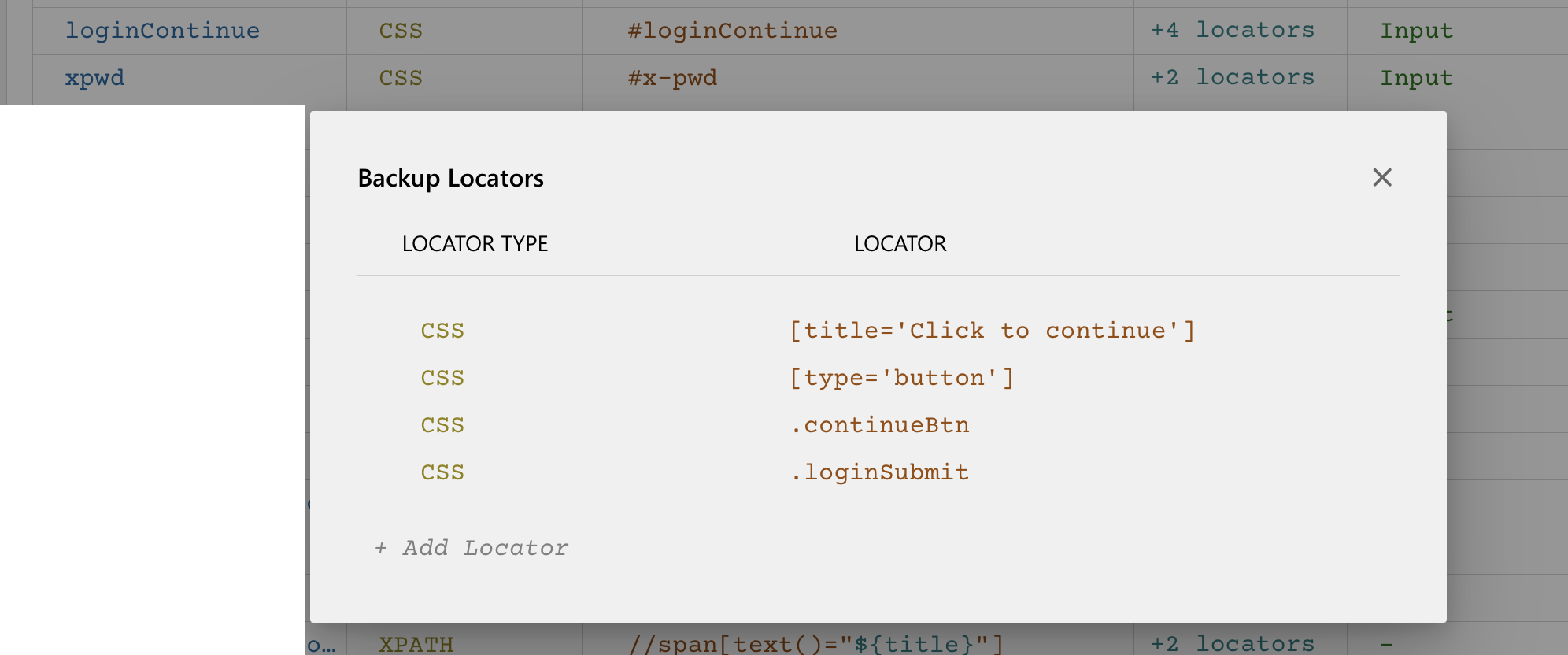

To ensure test stability, DevAssure's intelligent recorder also generates multiple backup locators based on the preferences set. When the web element or component cannot be located with the primary locator, the backup locator will be picked to continue the test execution.

Learn more about DevAssure's backup locators.

DevAssure is a desktop application that facilitates running the test in any environment - cloud, virtual or local. DevAssure's CLI provides the capability to run the tests as a package in any environment as defined by the user. Running DevAssure generated automated tests in docker containers is easy to set up and maintain.

Learn more about DevAssure's CLI

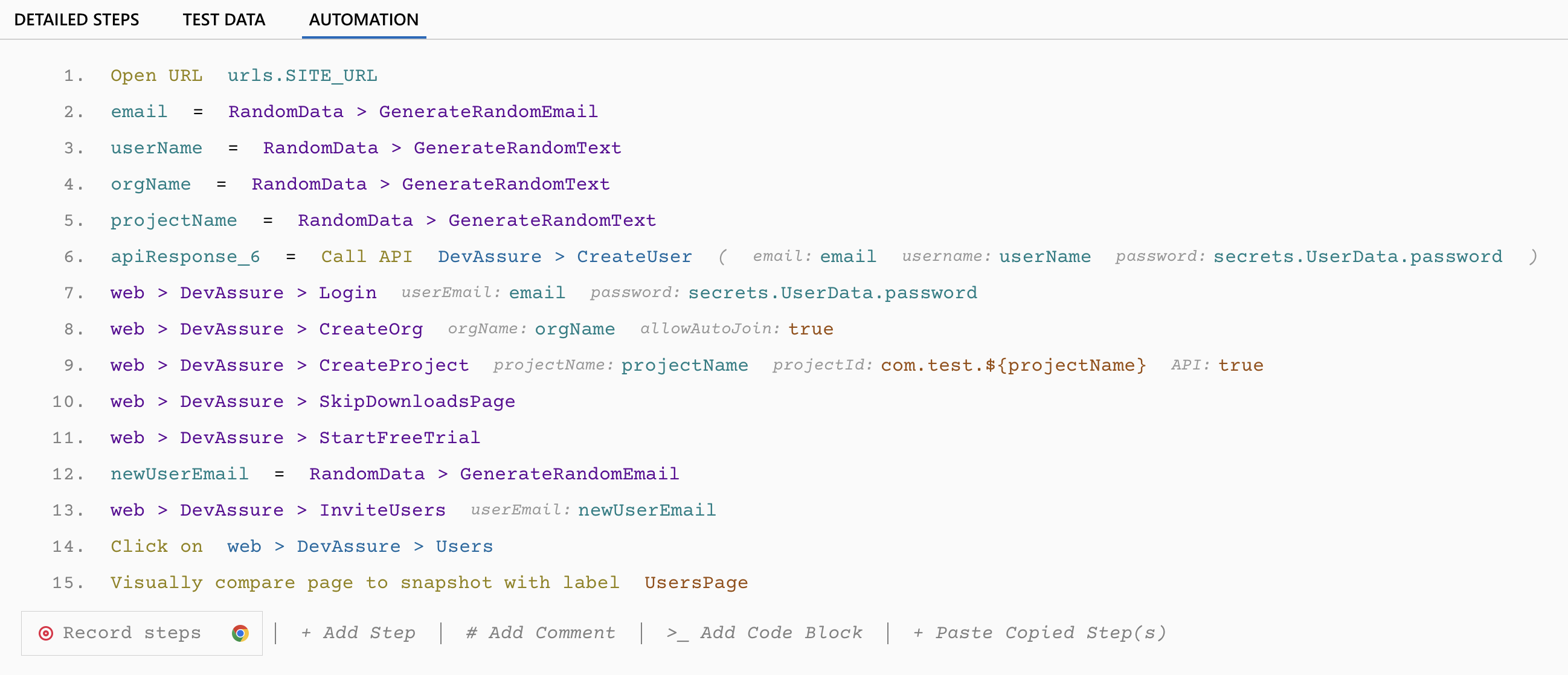

DevAssure provides the ability to interleave API and database calls and validations with the UI automation test steps, thereby ensuring that data preparation and validations in UI tests are not completely dependent on the UI elements alone.

Learn more about DevAssure's API Test Creation

DevAssure also provides the ability to query data sets from the database to serve as test data for the automated UI tests.

Learn more about DevAssure's Database support for Test Data management.

The before and after test hooks that are inbuilt into DevAssure provide the ability to create or seed data before test execution and clear data after test execution.

Learn more about DevAssure's Before and After test hooks.

For dynamic waits, async operations and page redirections, DevAssure provides the ability to configure implicit and explicit waits.

Additionally, DevAssure's Advanced code blocks provide the capability to poll and wait for async operations to be completed.

Learn more about DevAssure's Advanced code blocks.

To experience how DevAssure can leverage API testing, sign up for the free trial.
